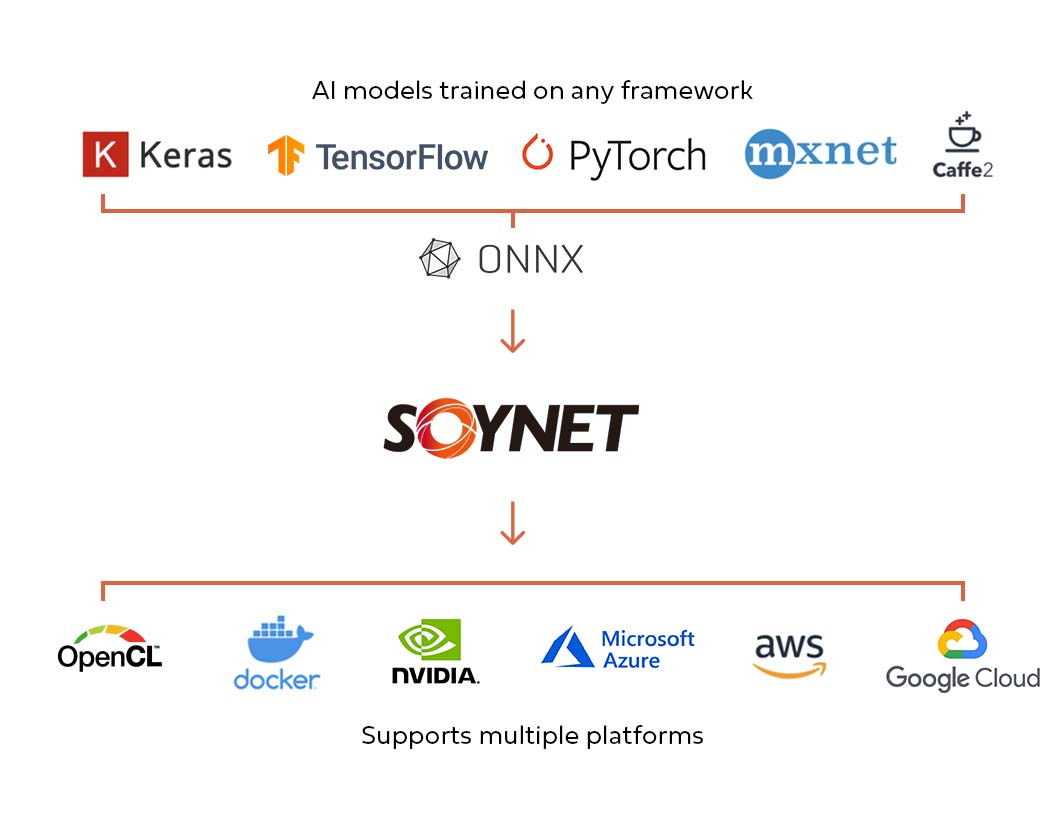

Speeding Up Deep Learning Inference Using TensorFlow, ONNX, and NVIDIA TensorRT | NVIDIA Technical Blog

[Opencl][ONNX] Failing to Compile the ONNX model at optimisation level greater than 0 on opencl · Issue #2859 · apache/tvm · GitHub
